Not all statement-of-work (SOW) engagements are the same, and one size does not fit all. This is why the management of SOW engagements must be tailored appropriately for management efforts to be effective. Certainly, some universal management framework best practices can be applied across one's entire SOW engagement spend. But important differences between engagement types require attention and "tailoring" in the execution management approach. A good example is the difference between SOW projects and SOW services.

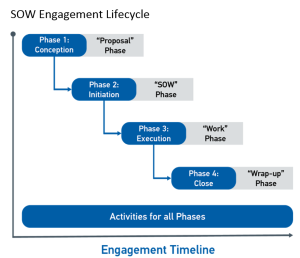

With SOW projects, each project and its resulting deliverables are unique. A software development project requires only one data model. An infrastructure project requires only one distinctive network diagram, or one series of them. The deliverables in these kinds of SOW engagements build on each other as one moves through the phases of the SOW Engagement Lifecycle, and together they describe the end product, which is the purpose of the project.

SOW service deliverables, by contrast, are iterative: each one is the resolution of an issue or the fulfillment of a request. The SOW solution provider responds to them as they arrive. They aren't individually defined in advance, although the kinds of issues and requests are usually defined by the scope of the service.

SOW projects also differ from services in that services are ongoing, often routine work. A support service desk is a familiar example: calls are answered, emails are received, service tickets are opened, and issues are resolved. The success of the service is measured by quantifiable performance indicators (how quickly the phone is answered, how quickly issues are resolved, etc.), which are usually expected to improve over time.

A project is very different from a service. Even from this basic perspective, one can't expect to manage all SOW engagements the same way. Tailoring of SOW engagement management begins with the statement-of-work document (where tasks and deliverables are tailored to fit the purpose of the engagement) and extends through all phases of the SOW Engagement Lifecycle.

Consider two different kinds of engagements as further examples: one SOW project (a finite work effort with a final deliverable or deliverables) and one SOW service (an ongoing work effort with a stream of deliverables that indicate the quality of the service being provided).

Infrastructure Project. An infrastructure project has an end result (new, upgraded, or expanded infrastructure, including workstation refreshes; ERP, accounting, email, and other system installs or upgrades; network infrastructure installs or upgrades; etc.) and occurs over a fixed period of time. This kind of project is not ongoing. Its core goal is to finish, that is, to reach the end deliverable. Project success is measured by completing the project on time and within budget, and by delivering a high-quality product and supporting deliverables.

Software Maintenance. Software maintenance is an SOW service engagement. As business needs change, the software used to run the business must change with them, and making those changes is software maintenance. Like a support service desk, the service usually responds to requests in the form of tickets and is evaluated by measurable performance indicators (in this case, the speed of response to a request, the quality of the software change, often measured in terms of software defects, etc.).

So how do these differ? They differ in pricing, in content, in the form of delivery and deliverables, and in engagement timeframe (one is ongoing, the other has a fixed completion date). The most definitive difference is the character of each engagement's deliverables, which drives different requirements for the metrics used to measure each engagement's success.

There are natural differences in the execution phase for each of these SOW engagement types. Software development projects conform to an SDLC (systems development life cycle) approach, in which development proceeds through analysis, design, construction, and further phases. Infrastructure projects are similar, although executing them typically doesn't require as many phases. Services, on the other hand, are iterative in the execution phase. Once an SOW solution provider has ramped up the service (and perhaps transitioned it from another provider), the work done during execution is not phased but iterative. It usually consists of responding to tickets and requests and reporting service quality monthly and quarterly. In theory, each issue resolution or fulfilled request is a deliverable. In practice, however, the deliverable for a service is usually considered to be the set of service-level agreement (SLA) reports: the objective quantitative and qualitative measures of the service provided on an ongoing basis.

Execution management tailoring does not stop at the project/service distinction. SOW engagements may require further tailoring depending on their complexity and on whether multiple delivery partners are involved in a specific SOW deliverable. This is not to say that no comparable engagement metrics can be used to measure performance across the entire SOW spend category. However, the execution management of certain SOW engagement types will require tailored best practices to produce the desired SOW results.