The US Equal Employment Opportunity Commission has recorded its first-ever settlement in a case involving AI-enabled age discrimination in the workplace.

According to a joint legal filing in a New York federal court, China-based iTutorGroup Inc., which provides English-language tutoring services to students in China, agreed to pay $365,000 to more than 200 people who applied for jobs in March and April 2020.

The Aug. 9 filing settles an EEOC lawsuit that charged the company's AI-powered online recruitment software with automatically rejecting older applicants, in violation of the federal Age Discrimination in Employment Act.

iTutorGroup hires thousands of US-based tutors annually to provide online instruction from their homes or other remote locations, according to the EEOC. The lawsuit alleged that iTutorGroup programmed its software to automatically reject female applicants age 55 or older and male applicants age 60 or older. As a result, iTutorGroup rejected more than 200 qualified applicants based in the US because of their age, the EEOC claimed.

The May 2022 lawsuit was the EEOC's first involving a company's use of AI to make employment decisions, Reuters reported, but experts expect more suits accusing employers of using AI software to discriminate. For example, in a pending proposed class action in California federal court, Workday is accused of designing hiring software, used by scores of large companies, that screens out Black, disabled and older applicants. Workday has denied wrongdoing, according to Reuters.

The proliferation of AI in hiring has prompted the EEOC and other federal agencies to urge Congress to enact legislation on the issue, adding to existing state and municipal laws that protect candidates from AI-based discrimination, writes Todd Keebaugh, executive VP of strategy and general counsel at Eliassen Group, in Staffing Industry Review magazine. To comply with existing laws, he advises employers to implement written policies governing the use of AI-enabled hiring tools and to supplement their existing written information security policies to address the storage, handling and disposal of collected data.

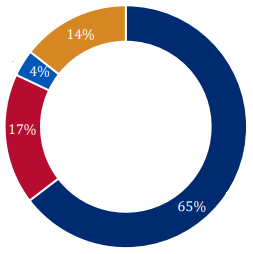

“We expect to see more legal actions and more settlements because the use of AI in employment settings is exploding,” attorneys Raeann Burgo and Wendy Hughes of law firm Fisher Phillips wrote in a blog post. “Approximately 79% to 85% of employers now use some form of AI in recruiting and hiring, and that number will surely increase. Given this exponential rise, employers are bound to have questions about compliance best practices.”