The EU’s Artificial Intelligence Act, approved by the European Parliament in March after the 27 member states unanimously endorsed it in February, will apply to any AI system used or providing outputs within the EU, regardless of where the provider or user is located. As the world’s first comprehensive legal framework on AI, the AI Act is likely to serve as a blueprint for other jurisdictions.

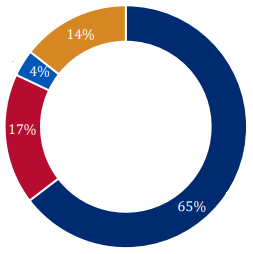

The AI Act takes a risk-based approach to regulating AI, classifying AI technologies according to the level of risk they pose to the fundamental rights, freedoms, and health and safety of individuals. The obligations it imposes on providers and users scale with the potential risk and impact of an AI tool, using four risk classifications:

- Unacceptable-risk AI systems are considered a threat to people and will be banned. They include social scoring, such as classifying people based on behavior, socioeconomic status or personal characteristics.

- High-risk AI systems are those that could negatively affect safety or fundamental rights, and they will have to be registered in an EU database. These include systems used in employment, worker management and access to self-employment. All high-risk AI systems will have to be assessed before being put on the market and throughout their lifecycle. General-purpose and generative AI, like ChatGPT, is not classified as high risk by default but will have to comply with transparency requirements.

- Limited-risk AI systems will have to comply with minimal transparency requirements that allow users to make informed decisions. For instance, people using a chatbot should be made aware that they are interacting with a machine so they can decide whether to continue.

- Minimal-risk systems, such as AI-enabled video games or spam filters, pose little or no risk and can continue to be used without additional obligations under the AI Act. Although many AI systems fall into this category, they will still need to be assessed to confirm their classification.
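For teams building an internal inventory of their AI tools, the four tiers above can be modeled as a simple lookup. The sketch below is illustrative only: the `RiskTier` enum, the `EXAMPLE_USE_CASES` table and the `classify` helper are hypothetical names, and the table simply restates the examples this article assigns to each tier. Real classification depends on a system’s intended purpose and requires legal review.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk classifications, with the treatment summarized above."""
    UNACCEPTABLE = "banned"
    HIGH = "permitted, subject to strict obligations"
    LIMITED = "permitted, subject to transparency requirements"
    MINIMAL = "permitted, no additional obligations"


# Hypothetical lookup table: example use cases from this article mapped to
# the tier the article assigns them. Keys are lowercase for matching.
# Illustrative only; not a legal classification.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "recruitment tool": RiskTier.HIGH,
    "worker management": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "ai-enabled video game": RiskTier.MINIMAL,
    "spam filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    """Look up the illustrative tier for one of the example use cases.

    Raises KeyError for anything not in the table: an unknown system
    needs its own assessment rather than a default tier.
    """
    return EXAMPLE_USE_CASES[use_case.strip().lower()]


if __name__ == "__main__":
    for case in ("Social scoring", "Recruitment tool", "Spam filter"):
        tier = classify(case)
        print(f"{case}: {tier.name} ({tier.value})")
```

Raising an error for unlisted systems, rather than defaulting to the minimal tier, mirrors the point that even minimal-risk systems must be assessed.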

Tools used in recruitment, such as facial recognition, matching and performance-tracking tools, will be considered high risk. Such AI systems are permitted but will be subject to strict compliance obligations.

High-risk AI systems will be subject to strict obligations before they can be put on the market:

- adequate risk assessment and mitigation systems;

- activity logs to ensure traceability of results;

- detailed documentation providing all information necessary on the system and its purpose for authorities to assess its compliance;

- clear and adequate information to the user of the tool;

- appropriate human oversight measures to minimize risk; and

- a high level of robustness, security and accuracy.

Additionally, the datasets feeding the system will need to be of a high quality to minimize risks and discriminatory outcomes.

All remote biometric identification systems are considered high risk and subject to strict requirements. Organizations will have to disclose when a system uses emotion recognition or biometric classification, and notify users when image, audio or video content has been generated or manipulated by AI so that it could falsely appear authentic: for example, an AI-generated video of a public figure making a statement that was, in fact, never made. This disclosure requirement will apply to systems in all risk categories.

The AI Act also contains a revised definition of “AI systems,” aligned with the OECD’s definition: “a machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations or decisions that can influence physical or virtual environments.”

The approved text must still be formally adopted by the Council of Ministers before it can become EU law. The AI Act will then enter into force on the 20th day after its publication in the Official Journal of the EU. Formal adoption is expected in May; some provisions will become enforceable six to 12 months after entry into force and most of the remainder after 24 months, with obligations for certain high-risk systems following after 36 months. Member states will also need to appoint national competent authorities to oversee implementation in their jurisdictions.
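The timeline above is plain date arithmetic, sketched below. The function names are hypothetical and the publication date in the usage example is invented purely for illustration. The month arithmetic is naive (it clamps to the end of shorter months), so the exact application dates fixed in the final legal text may differ slightly from what this produces.

```python
import calendar
from datetime import date, timedelta


def entry_into_force(publication: date) -> date:
    """Entry into force falls on the 20th day after publication."""
    return publication + timedelta(days=20)


def add_months(start: date, months: int) -> date:
    """Add whole calendar months, clamping to the last day of the month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def approximate_milestones(publication: date) -> dict[str, date]:
    """Rough dates for the 6-, 12- and 24-month milestones."""
    start = entry_into_force(publication)
    return {
        "entry into force": start,
        "6 months": add_months(start, 6),
        "12 months": add_months(start, 12),
        "24 months": add_months(start, 24),
    }


if __name__ == "__main__":
    # Hypothetical publication date, chosen only for illustration.
    for label, when in approximate_milestones(date(2024, 5, 31)).items():
        print(f"{label}: {when.isoformat()}")
```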

Enforcement could include fines of up to €35 million (about US$38 million) or 7% of global annual revenue, whichever is higher, making penalties even heftier than the GDPR’s maximum of €20 million or 4% of global revenue. The use of prohibited AI systems will incur the largest potential fines. Violations of most other obligations are subject to a lower maximum of €15 million (about US$16 million) or 3% of global revenue, while providing incorrect or misleading information to authorities will carry a maximum penalty of €7.5 million (about US$8 million) or 1% of global revenue.
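The “whichever is higher” rule means the revenue-based limit overtakes the fixed cap once a company is large enough. The sketch below makes that arithmetic concrete. `FineTier`, `maximum_fine` and the tier constants are hypothetical names, the revenue figures are invented, and the calculation deliberately ignores the separate rule for smaller companies and the factors regulators weigh when setting an actual fine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    """One maximum-fine tier: a fixed cap or a share of global revenue."""
    fixed_cap_eur: int
    revenue_percent: int


# Caps as described in this article.
PROHIBITED_USE = FineTier(fixed_cap_eur=35_000_000, revenue_percent=7)
OTHER_VIOLATIONS = FineTier(fixed_cap_eur=15_000_000, revenue_percent=3)
MISLEADING_INFO = FineTier(fixed_cap_eur=7_500_000, revenue_percent=1)


def maximum_fine(tier: FineTier, global_revenue_eur: int) -> float:
    """Return the upper bound on a fine: whichever limit is higher.

    Simplified: ignores the separate rule for smaller companies and the
    factors regulators weigh when setting an actual fine.
    """
    revenue_based = tier.revenue_percent * global_revenue_eur / 100
    return max(tier.fixed_cap_eur, revenue_based)


if __name__ == "__main__":
    # Hypothetical companies with €200 million and €1 billion in revenue.
    for revenue in (200_000_000, 1_000_000_000):
        cap = maximum_fine(PROHIBITED_USE, revenue)
        print(f"revenue €{revenue:,}: prohibited-use cap €{cap:,.0f}")
```

For the hypothetical €200 million company, 7% of revenue is €14 million, so the €35 million fixed cap applies; at €1 billion, 7% is €70 million, which exceeds the fixed cap. The break-even point for the top tier is €500 million in global revenue.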